Published: 15 Nov 2019 | Author: Matthew Oen

A well-designed SSAS tabular model is often the key ingredient in a successful analytics solution. It helps the business deliver the kind of ad-hoc analysis and on-the-fly insights that drive performance improvement and economic benefit. However, not all models are well-designed. Indeed, a poorly performing cube can quickly become a burden for any organisation, degrading the quality of analysis and draining valuable business resources. That’s why SSAS Tabular optimisation is so crucial for businesses wanting to get the most value out of their analytics solution.

Recently, I consulted for a large electrical merchandising business who were having some trouble with their SSAS tabular models. With national operations, it was imperative that their cubes could rapidly and reliably deliver the analysis needed for the business to confidently make strategic decisions around sales, purchasing and inventory. Memory issues and ambiguous design principles were proving to be a challenge in getting the tabular model to behave, and it was clear that I needed to tune the existing cubes with some simple optimisation techniques.

When attempting SSAS Tabular optimisation, I employ a straightforward 5-step strategy:

This 5-step performance tuning approach helps ensure that tabular model issues are precisely identified and appropriately addressed.

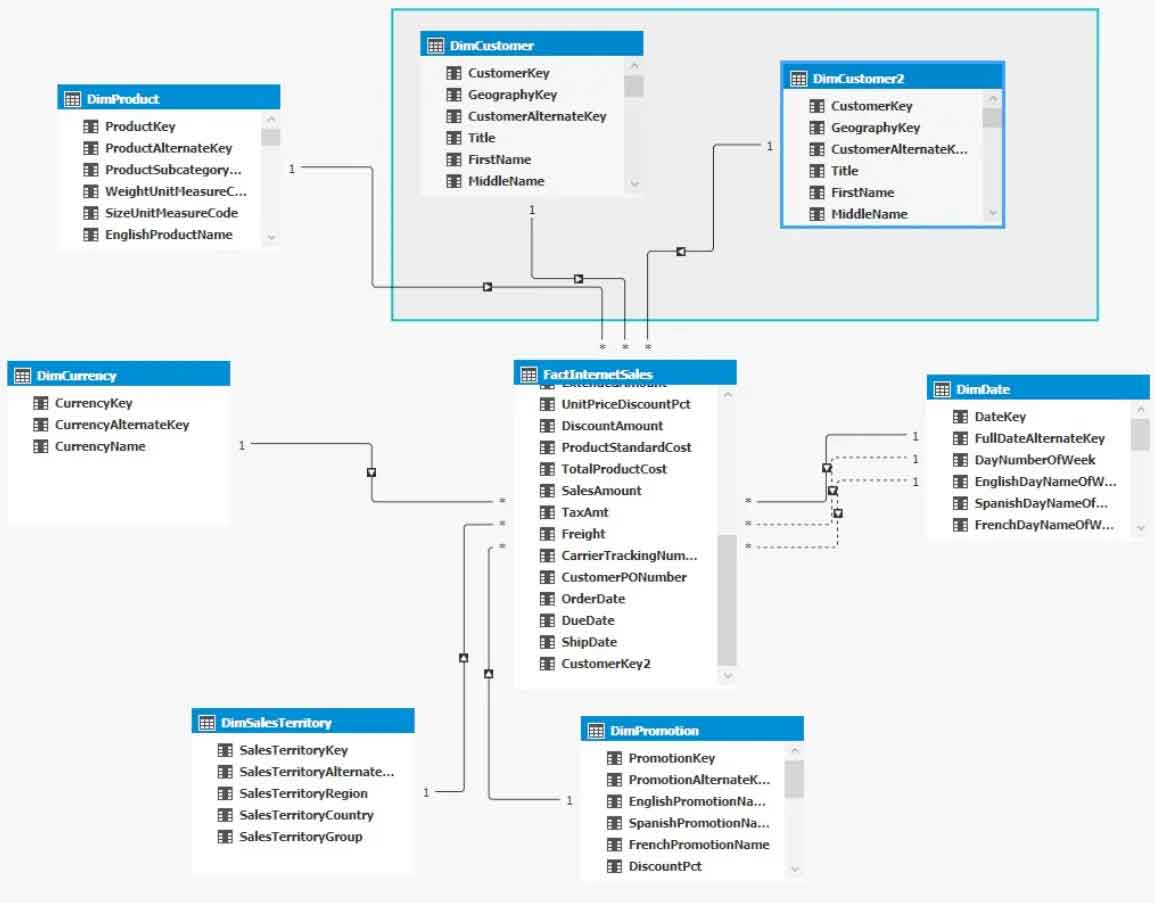

A concise tabular model is one that performs best. Therefore, the first step is to review the model itself. Very often a poorly performing cube contains unnecessary tables or relationships that provide no real value. A thorough review of which tables are present in the model and what value they bring will uncover what is necessary and what is redundant. Talking to stakeholders about what they need will also help determine which tables should go and which should stay. In my example, I was able to reduce the cube size by removing dimension tables that I discovered the business was no longer interested in. This redesign step typically yields ‘quick-and-easy’ wins in terms of cube performance, as it is the easiest to implement.

Figure 1. Removing unnecessary tables reduces SSAS tabular model complexity

What data actually goes into the tables will ultimately determine the quality of the tabular model. Similar to the first step, a review of the tables will often uncover unnecessary columns that do not need to be loaded into the model, such as columns that are never filtered on or that contain largely null values. Table structure is also important to tabular model performance, as it affects how much data needs to be loaded. For example, you could reduce the row count of the sales fact table by aggregating it to the invoice level instead of the invoice line level. Such a reduction in size means that the cube requires less memory.
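As a rough illustration of the invoice-level aggregation described above, the following Python sketch collapses invoice-line rows into one row per invoice. The column names and sample rows are hypothetical, and in practice this aggregation would more likely be done in the source query or view that feeds the model.

```python
from collections import defaultdict

# Hypothetical invoice-line rows: (invoice_id, product, quantity, line_amount)
invoice_lines = [
    ("INV-001", "Cable", 10, 25.0),
    ("INV-001", "Switch", 2, 14.0),
    ("INV-002", "Cable", 5, 12.5),
    ("INV-002", "Conduit", 1, 8.0),
    ("INV-002", "Socket", 4, 20.0),
]


def aggregate_to_invoice_level(lines):
    """Collapse invoice-line rows into one summary row per invoice."""
    totals = defaultdict(lambda: {"line_count": 0, "quantity": 0, "amount": 0.0})
    for invoice_id, _product, quantity, amount in lines:
        row = totals[invoice_id]
        row["line_count"] += 1
        row["quantity"] += quantity
        row["amount"] += amount
    return dict(totals)


invoice_totals = aggregate_to_invoice_level(invoice_lines)
print(len(invoice_lines), "line rows reduced to", len(invoice_totals), "invoice rows")
```

The trade-off is that product-level detail is no longer available in the aggregated table, so this only suits models where the business does not need to analyse individual invoice lines.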

Figure 2. Tidy up tables by removing columns, and reducing rows

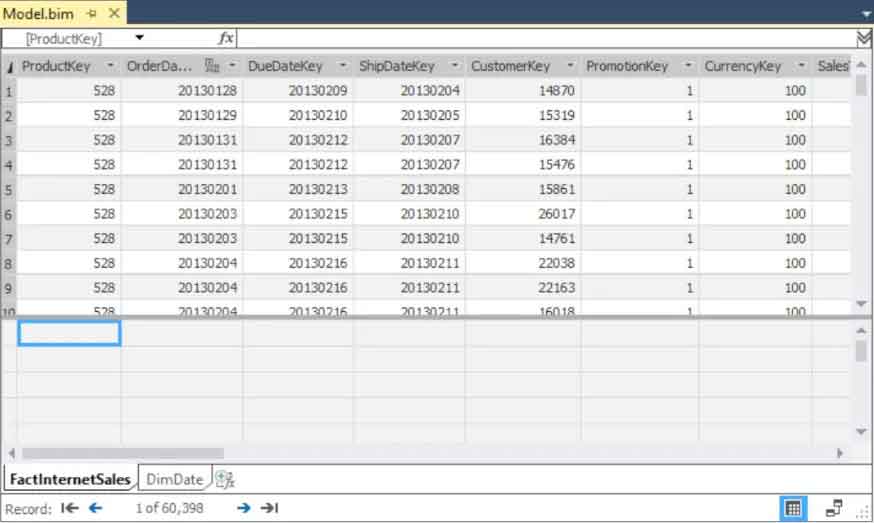

A crucial aspect of cube performance is compression. Columns with certain data types, or with unique values in every row, compress badly and require more memory. An effective optimisation technique is to correct the data type or values in a column so that it compresses better. Casting values as integers instead of strings, or fixing the number of decimal places, are fundamental practices that are often overlooked in tabular model design, and ultimately come at the expense of performance. In my example, I was able to create a new unique invoice ID that could be used by the business and compressed as an integer. Previously, the varchar invoice key was unique at almost every row of the sales table and was compressing very poorly. The storage engine (VertiPaq) wants to compress columns, and having similar values in the same column greatly aids this. A great tool for this kind of analysis is the VertiPaq Analyzer. It can highlight columns that are likely to benefit from compression work and help track the results of each optimisation technique.
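To make the idea concrete, here is a minimal Python sketch of two checks one might run before loading a table: the ratio of distinct values to rows in a column (a ratio near 1.0 suggests the column will compress poorly), and a mapping from long string keys to small sequential integers. The sample keys and function names are hypothetical, and this is not how VertiPaq Analyzer itself works; it only mirrors the reasoning above.

```python
def cardinality_ratio(values):
    """Return distinct values divided by row count (1.0 means every row is unique)."""
    values = list(values)
    return len(set(values)) / len(values) if values else 0.0


def build_surrogate_keys(keys):
    """Map each distinct string key to a sequential integer, in first-seen order."""
    mapping = {}
    for key in keys:
        if key not in mapping:
            mapping[key] = len(mapping) + 1
    return mapping


# Hypothetical sales rows: (varchar invoice key, state)
sales_rows = [
    ("NSW-2019-000123-A", "NSW"),
    ("NSW-2019-000124-A", "NSW"),
    ("VIC-2019-000087-B", "VIC"),
    ("VIC-2019-000087-B", "VIC"),
    ("QLD-2019-000310-A", "QLD"),
]

invoice_keys = [row[0] for row in sales_rows]
states = [row[1] for row in sales_rows]

print("invoice key ratio:", cardinality_ratio(invoice_keys))
print("state ratio:", cardinality_ratio(states))

# Replace the long string key with a compact integer ID.
surrogate = build_surrogate_keys(invoice_keys)
invoice_ids = [surrogate[key] for key in invoice_keys]
print("integer invoice IDs:", invoice_ids)
```

The integer ID has the same number of distinct values as the original key, so the gain comes from no longer storing a long string for each distinct value rather than from lower cardinality.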

Figure 3. The VertiPaq Analyzer reveals compression pain points

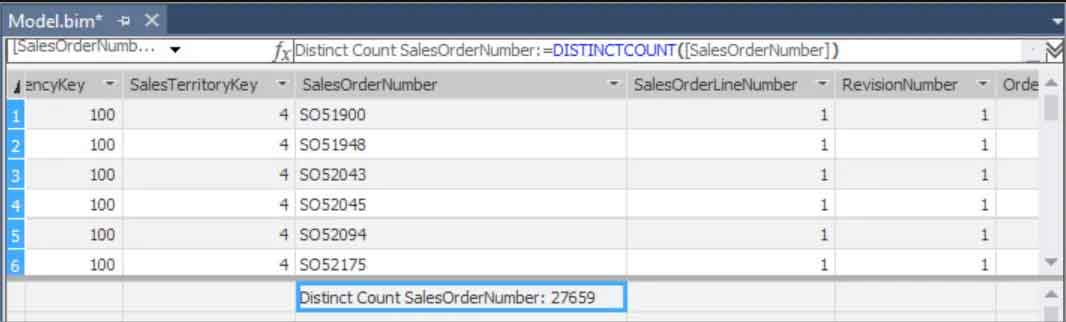

For cube users, it is critical that the OLAP queries they run return accurate results rapidly. If a user cannot get the information they need from a model in a reliable or timely manner, the cube is failing to provide the benefits expected of it. Therefore, an important part of tabular model optimisation revolves around the measures, and ensuring that the DAX expressions used are optimised for the formula engine. Keeping the measures simple, by using basic expressions and removing complicated filtering clauses, means that the measures should perform better. In my example, I was able to change the expressions of some sales measures at different period intervals (such as month-to-date and year-to-date) so that they could run across different filter contexts, thus reducing calculation time.

Figure 4. Simple DAX equals better performance

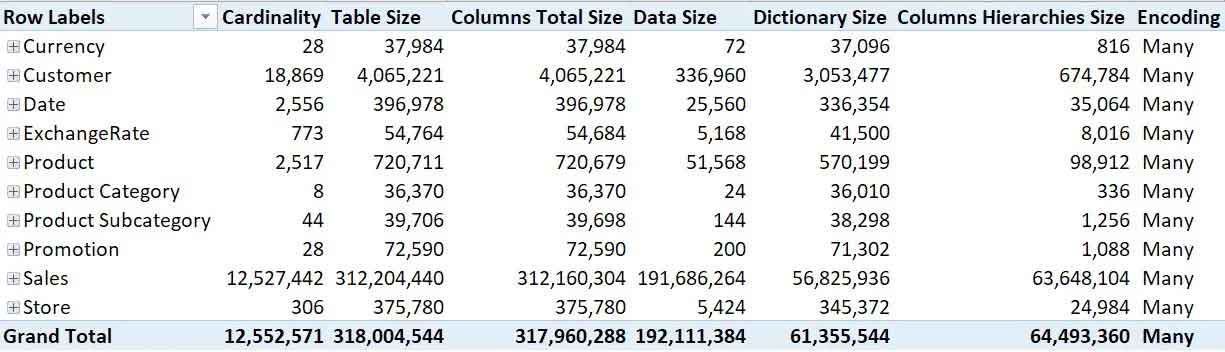

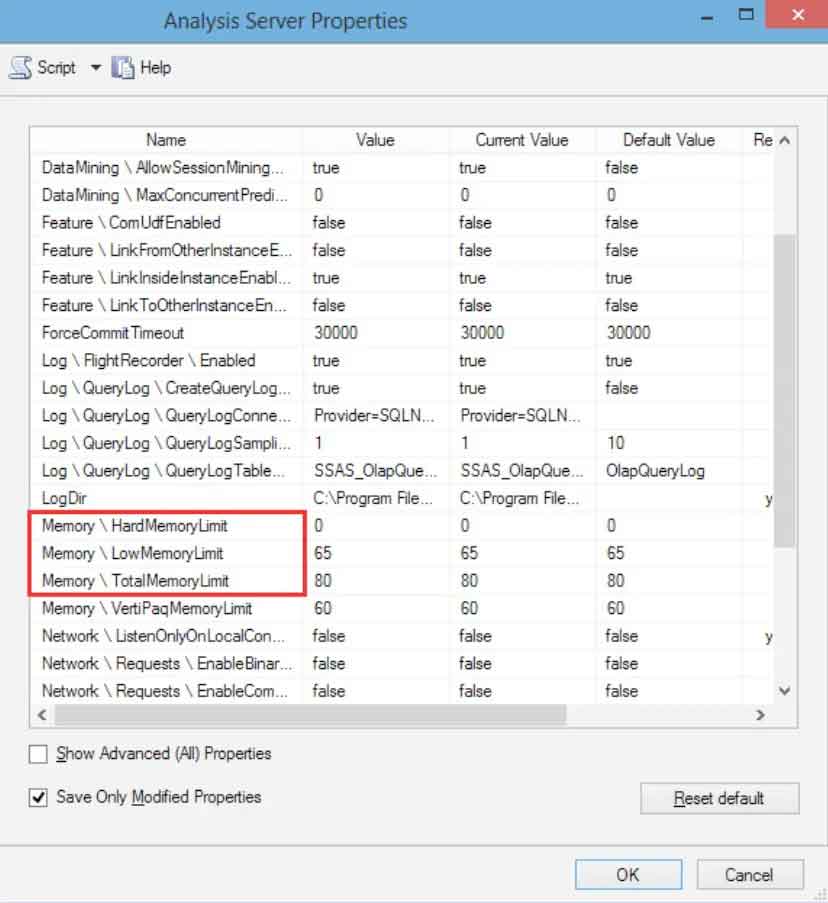

Finally, the biggest factor in tabular model processing performance is the memory configuration itself. Depending on the edition of Analysis Services, different memory limits apply. For the Standard Edition, the 16 GB limit imposed on a single instance of Analysis Services can often be the ‘killer of cubes’. If a reasonable business case exists, then moving to the Enterprise Edition or a cloud-based solution can be the right answer to memory woes. However, there are steps that can be taken to get the best out of an SSAS tabular model without abandoning Standard Edition altogether. Increasing the amount of RAM on the server and modifying the server instance memory properties allows you to fine-tune processing and reduce the likelihood of out-of-memory errors. In my example, the cube was failing to process because it would run out of memory during a Full Process. I increased the RAM from 32 GB to 40 GB, and reduced the Total Memory Limits in the server instance properties. With more memory and lower thresholds at which the memory cleaner processes were initiated, the cube was able to process in full each time without error.
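The arithmetic behind these settings can be sketched in a few lines of Python. Analysis Services memory properties such as LowMemoryLimit and TotalMemoryLimit are interpreted as a percentage of physical memory when the value is 100 or less, and as an absolute number of bytes when it is larger. The sketch below assumes the commonly documented defaults of 65 and 80, and the lowered values of 60 and 75 are purely illustrative; they are not the values used in the engagement described above, so check your own instance before relying on any of them.

```python
def limit_in_gb(setting, total_ram_gb):
    """Convert an SSAS memory setting to GB.

    Values from 1 to 100 are treated as a percentage of physical memory;
    larger values are treated as an absolute number of bytes.
    """
    if setting <= 0:
        raise ValueError("this sketch only handles positive settings")
    if setting <= 100:
        return total_ram_gb * setting / 100
    return setting / 1024**3


# Assumed defaults versus hypothetical lowered thresholds (illustration only).
scenarios = [
    ("32 GB RAM, assumed defaults", 32, 65, 80),
    ("40 GB RAM, assumed defaults", 40, 65, 80),
    ("40 GB RAM, lowered limits", 40, 60, 75),
]

for label, ram_gb, low_limit, total_limit in scenarios:
    low_gb = limit_in_gb(low_limit, ram_gb)
    total_gb = limit_in_gb(total_limit, ram_gb)
    print(f"{label}: cleaner starts near {low_gb:.1f} GB, "
          f"total limit near {total_gb:.1f} GB")
```

Lowering the percentages on a larger server makes the memory cleaner start earlier relative to the available RAM, which leaves more headroom for the spike in memory use during a Full Process.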

Figure 5. Fine tune the memory limits to find the optimal level of performance

Like any business asset, an SSAS tabular model loses value when it is not properly configured or utilised. However, with the right approach, any model can be transformed from an underperforming asset into a valuable resource for a business.

If you’re having trouble with SSAS tabular optimisation, we want to hear about it! Please contact us to find out about how we can help you optimise your cubes.
